In the quest to build robots that interact smoothly with the physical world, touch sensing has long remained a frontier. The recent work Sparsh-X, produced by Meta FAIR in collaboration with academic partners, marks a significant step toward making touch a first-class perceptual modality for robots.

The Problem Space: Why Touch Matters

Consider a robot trying to pick up a delicate object (say, a light bulb) or insert a plug into a socket. Without tactile awareness, the robot may apply too much force, let the object slip, or misjudge contact. Human hands, meanwhile, blend vision with rich tactile feedback (pressure, vibration, micro-deformations, and motion cues) to sense the environment in real time.

If robots are to approach this finesse, they need:

- High-bandwidth, rich tactile sensors

- Smart models that fuse multiple modalities

- General-purpose representations enabling downstream tasks (e.g. grasping, in-hand manipulation)

That is exactly the niche Sparsh-X tries to fill.

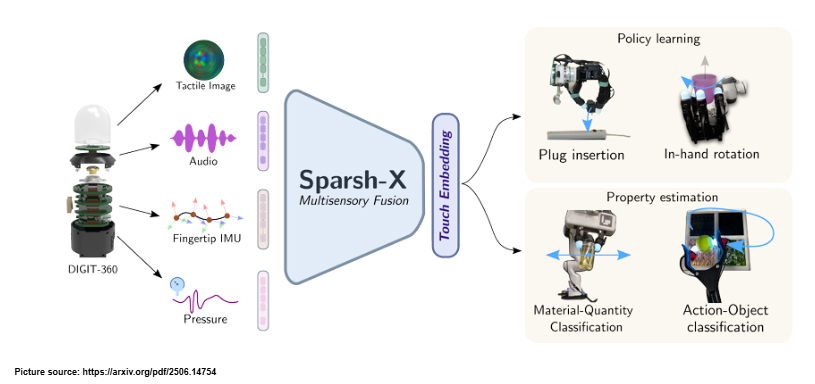

What Is Sparsh-X and How It Works

Picture source: https://arxiv.org/pdf/2506.14754

Sensor Platform: Digit 360

Sparsh-X is built atop Digit 360, Meta’s advanced artificial fingertip. Key facts about Digit 360:

- It captures multimodal tactile data: high-resolution tactile images, contact microphone (acoustic vibration), IMU (motion), and pressure sensors.

- It has more than 18 unique sensing features and can detect spatial detail down to ~7 microns and forces as small as 1 mN.

- Its internal “taxel” grid (tactile pixel-like units) is dense and omnidirectional, allowing nuanced contact mapping.

- The sensor is real-time capable, processing touch signals quickly enough to respond faster than typical human reaction times.

By capturing multiple sensory streams during contact-rich interactions, Digit 360 provides the raw “touch canvas” on which Sparsh-X builds.
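To make the four streams concrete, here is a minimal NumPy sketch of how one contact window might be held in memory and split into token sequences that per-modality transformer encoders could consume. The `TouchWindow` class, the array shapes, the patch size, and the window lengths are hypothetical choices for illustration only; they are not Digit 360's actual data format or Sparsh-X's preprocessing.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class TouchWindow:
    """One time window of multimodal tactile data (hypothetical layout)."""
    image: np.ndarray     # (H, W, C) tactile image
    audio: np.ndarray     # (T_audio,) contact-microphone samples
    imu: np.ndarray       # (T_imu, 3) motion readings
    pressure: np.ndarray  # (T_p,) pressure readings


def patchify_image(img: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patch tokens of length patch*patch*C."""
    h, w, c = img.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    x = img.reshape(h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)           # (nH, nW, patch, patch, C)
    return x.reshape(-1, patch * patch * c)  # (num_patches, patch*patch*C)


def window_signal(sig: np.ndarray, win: int) -> np.ndarray:
    """Chop a (T, ...) signal into non-overlapping windows of `win` samples (remainder dropped)."""
    n = sig.shape[0] // win
    return sig[: n * win].reshape(n, -1)     # (n, win * channels)


def tokenize(sample: TouchWindow) -> dict:
    """Turn each stream into its own token sequence (hypothetical sizes)."""
    return {
        "image": patchify_image(sample.image, patch=16),
        "audio": window_signal(sample.audio, win=400),
        "imu": window_signal(sample.imu, win=10),
        "pressure": window_signal(sample.pressure, win=10),
    }


# Usage with placeholder zeros in place of real sensor readings.
sample = TouchWindow(
    image=np.zeros((224, 224, 3)),
    audio=np.zeros(48_000),
    imu=np.zeros((100, 3)),
    pressure=np.zeros(100),
)
tokens = tokenize(sample)
```

Each modality keeps its own sequence length (196 image patches versus 10 pressure windows here), which is one reason to encode them separately before any fusion.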

Model Architecture & Training

Sparsh-X is a transformer-based encoder that fuses the four modalities (image, audio, motion, pressure) into a unified latent embedding.

Here’s a breakdown:

- Per-modality encoding

Each modality is first processed independently through several transformer layers (self-attention, feed-forward) to generate modality-specific embeddings.

- Cross-modal fusion via bottleneck tokens

Sparsh-X introduces a set of bottleneck fusion tokens that connect all modalities. These tokens link representations across modalities and facilitate cross-modal information flow. After each cross-modal update, the fusion tokens are averaged to encourage shared information.

- Self-supervised (SSL) training

The model is trained with a DINO-style self-distillation objective, which requires no ground-truth labels, over ~1 million recorded contact-rich interactions.
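For readers unfamiliar with DINO-style self-distillation, the sketch below shows the generic recipe in NumPy: a teacher network's output is centered and sharpened with a low temperature, the student is trained to match it via cross-entropy, and the teacher's weights track an exponential moving average (EMA) of the student's. The temperatures, momenta, and output size `K` are illustrative assumptions, and the random arrays stand in for projection-head outputs on two augmented views of the same touch window; Sparsh-X's exact views, heads, and hyperparameters are not specified here.

```python
import numpy as np


def log_softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def dino_loss(student_logits, teacher_logits, center, t_student=0.1, t_teacher=0.04):
    """Cross-entropy between the centered, sharpened teacher distribution and the student.

    student_logits, teacher_logits: (batch, K) outputs for two different views.
    In a real training loop no gradient flows through the teacher branch.
    """
    teacher_probs = np.exp(log_softmax((teacher_logits - center) / t_teacher))
    student_logp = log_softmax(student_logits / t_student)
    return -(teacher_probs * student_logp).sum(axis=-1).mean()


def update_center(center, teacher_logits, momentum=0.9):
    """EMA of the teacher's mean output; subtracting it discourages collapse to one output."""
    return momentum * center + (1 - momentum) * teacher_logits.mean(axis=0)


def ema_update(teacher_params, student_params, momentum=0.996):
    """Teacher weights follow an exponential moving average of the student weights."""
    return {k: momentum * teacher_params[k] + (1 - momentum) * student_params[k]
            for k in teacher_params}


# Usage with random stand-ins for the two views' head outputs.
rng = np.random.default_rng(0)
K = 64                                 # illustrative number of output dimensions
student_out = rng.normal(size=(8, K))  # student sees view A
teacher_out = rng.normal(size=(8, K))  # teacher sees view B
center = np.zeros(K)
loss = dino_loss(student_out, teacher_out, center)
center = update_center(center, teacher_out)
```

Centering and sharpening pull in opposite directions (toward uniform and toward one-hot outputs respectively), which is what lets this label-free objective avoid trivial solutions.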

Through this self-supervised training, Sparsh-X learns a multisensory touch representation whose embeddings capture higher-level, physically meaningful properties of contact.
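The bottleneck fusion step described in the architecture breakdown can be sketched in NumPy in the style of attention-bottleneck fusion: each modality attends over its own tokens concatenated with the shared fusion tokens, and the per-modality updates of those fusion tokens are then averaged. This is a simplified illustration, assuming tokens have already been encoded per modality and projected to a common width `D`; the single attention head, random weights, missing feed-forward and normalization layers, the number of fusion tokens `B`, and mean-pooling the fusion tokens into one embedding are all assumptions, not Sparsh-X's implementation.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention with a residual connection."""
    q, k, v = x @ wq, x @ wk, x @ wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    return x + attn @ v


def bottleneck_fusion_layer(tokens, bottleneck, params):
    """One fusion step.

    tokens:     dict modality -> (N_m, D) modality-specific tokens
    bottleneck: (B, D) shared fusion tokens
    params:     dict modality -> (wq, wk, wv), separate weights per modality
    """
    b = bottleneck.shape[0]
    new_tokens, bottleneck_updates = {}, []
    for name, x in tokens.items():
        # Each modality sees only its own tokens plus the shared fusion tokens.
        joint = np.concatenate([x, bottleneck], axis=0)
        out = self_attention(joint, *params[name])
        new_tokens[name] = out[:-b]
        bottleneck_updates.append(out[-b:])
    # Averaging the per-modality updates is how information crosses modalities.
    return new_tokens, np.mean(bottleneck_updates, axis=0)


# Usage with random placeholder tokens and weights.
rng = np.random.default_rng(0)
D, B = 32, 4
tokens = {m: rng.normal(size=(n, D)) for m, n in
          [("image", 196), ("audio", 120), ("imu", 10), ("pressure", 10)]}
params = {m: tuple(rng.normal(scale=D ** -0.5, size=(D, D)) for _ in range(3))
          for m in tokens}
bottleneck = np.zeros((B, D))
for _ in range(2):  # two fusion layers
    tokens, bottleneck = bottleneck_fusion_layer(tokens, bottleneck, params)
embedding = bottleneck.mean(axis=0)  # pooled (D,) multisensory embedding
```

Because modalities exchange information only through the `B` shared tokens, each attention call scales with (N_m + B)^2 for a single modality rather than with the square of the total token count, as full joint attention over all streams would.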

Data Collection & Scale

Sparsh-X’s training corpus draws from two main sources:

- An Allegro robotic hand, each fingertip fitted with Digit 360, performing random object interactions (sliding, tapping, dropping)

- A manual picker rig using the same sensor to perform atomic manipulations (pick, place, slide, drop) over different surfaces and object types

In total, the dataset spans approximately 1 million contact-rich interactions across diverse materials, textures, motions, and surfaces.

Such scale and diversity enable robustness and generalization across tasks.

In real-robot tasks:

- In a plug insertion imitation learning experiment, policies augmented with Sparsh-X representations outperformed those relying solely on tactile images.

- In in-hand manipulation (e.g. rotation), using Sparsh-X representations enables better adaptation to slip, weight perturbations, and surface changes.

These results suggest that, for rich tactile perception, fusing multiple sensory streams can substantially outperform single-modality pipelines.

Applications & Impact

The capabilities unlocked by Sparsh-X and Digit 360 open doors in multiple domains:

Robotics & Manipulation

Robots can better grasp, handle, and manipulate delicate or complex objects. Tasks such as inserting connectors or wires, or assembling parts in confined workspaces, become more reliable with tactile nuance.

Virtual Reality & Haptics

In VR or AR systems, one can imagine rendering touch sensations more faithfully by leveraging embeddings that encode physical properties. This could lead to more immersive and realistic tactile feedback.

Assistive & Rehabilitation Technology

For users with sensory impairments, instruments capable of interpreting touch can augment feedback, e.g. prosthetics with tactile “feeling” or robotic aids with refined touch sensing.

Healthcare & Telemedicine

Remote palpation or tactile sensing could improve diagnostics or rehabilitation exercises delivered remotely, especially when integrated with robotic interfaces.

Challenges, Limitations & Open Questions

While Sparsh-X is a breakthrough, it also surfaces critical challenges and caveats:

- Domain shift & generalization

The training dataset, while large, may not cover all object types, textures, or environmental conditions (e.g. extreme temperatures, moist surfaces). Generalization to new domains remains nontrivial.

- Latency & real-time constraints

Fusing multiple high-bandwidth modalities with transformer processing is computationally demanding. Ensuring real-time responsiveness on embedded systems is a challenge.

- Sensor calibration & noise

Tactile sensors are sensitive to calibration drift, wear and tear, and environmental noise (vibrations, friction). Robustness under mechanical degradation is vital.

- Interpretability of embeddings

The internal embeddings, while powerful, may lack direct interpretability. Understanding why a particular tactile embedding leads to a certain inference is nontrivial.

- Data collection scaling & labeling

Although Sparsh-X uses self-supervision (avoiding costly manual labels), collecting high-quality multisensory interaction data at scale remains expensive and time-consuming.

- Comparisons to alternative modalities

It remains to be seen how tactile fusion approaches compare with, or complement, vision + depth + force fusion pipelines. In some tasks vision may dominate; in others, touch is indispensable.

- Edge deployment & power constraints

For robotic hands or mobile agents, energy, memory, and compute budgets are limited; adapting Sparsh-X to lightweight (pruned or quantized) variants is a potential research direction.
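As an illustration of the quantization direction, here is a minimal NumPy sketch of symmetric, per-tensor, weight-only int8 post-training quantization for a single linear layer. This is a generic technique, not something the Sparsh-X authors are reported to use; the layer size and random weights are placeholders.

```python
import numpy as np


def quantize_int8(w):
    """Symmetric per-tensor quantization: w is approximated by scale * q, with q in [-127, 127]."""
    scale = float(np.abs(w).max()) / 127.0
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any scale works
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def int8_linear(x, q, scale):
    """Weight-only quantized linear layer: int8 weights are upcast, multiplied, then rescaled."""
    return (x @ q.astype(np.float32)) * scale


# Usage: compare the quantized layer with the float32 reference.
rng = np.random.default_rng(0)
w = rng.normal(size=(256, 256)).astype(np.float32)
x = rng.normal(size=(4, 256)).astype(np.float32)
q, scale = quantize_int8(w)
ref = x @ w
approx = int8_linear(x, q, scale)
rel_err = np.abs(approx - ref).max() / np.abs(ref).max()
# Memory: w.nbytes is 262144 bytes, q.nbytes is 65536 bytes (4x smaller).
```

Storing weights as int8 cuts this layer's weight memory by 4x relative to float32 at the cost of a small rounding error. Production toolchains typically add per-channel scales and true integer kernels; this sketch only shows the underlying arithmetic.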

Future Directions & Extensions

- Adaptive fusion & occlusion handling

One interesting path is modality dropout: the model should degrade gracefully if one sensor fails or is occluded.

- Continual learning / lifelong touch adaptation

As a robot interacts with new objects, can Sparsh-X refine itself online, handling drift and novel surfaces?

- Cross-sensor generality

Extending beyond Digit 360 to other tactile sensors (e.g. magnetic skins, resistive tactile arrays) through transfer learning or domain adaptation.

- Integration with vision and language

Fuse tactile embeddings with visual perception and high-level reasoning (e.g. language instructions) for holistic embodied agents.

- Lightweight real-time variants

Pruning, quantization, or distillation to run on embedded controllers or FPGA/ASIC platforms.

- Explainable tactile reasoning

Tools to visualize which tactile features, modalities, or temporal slices drove certain decisions, improving trust and debugging.
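Modality dropout is usually applied at training time: whole sensor streams are randomly removed from a sample so the model learns not to depend on any single one. The NumPy sketch below is a hypothetical helper illustrating that idea; the drop probability `p_drop`, the independent per-modality drop decision, and the rule that at least one modality always survives are assumptions, since the direction above is only proposed, not specified.

```python
import numpy as np


def modality_dropout(tokens, p_drop, rng):
    """Randomly remove whole modalities from one training sample.

    tokens: dict modality -> (N_m, D) token array
    p_drop: probability of dropping each modality independently
    rng:    numpy.random.Generator
    At least one modality is always kept so the sample is never empty.
    """
    names = list(tokens)
    keep = [n for n in names if rng.random() >= p_drop]
    if not keep:
        keep = [names[rng.integers(len(names))]]
    return {n: tokens[n] for n in keep}


# Usage: simulate a training sample with random placeholder tokens.
rng = np.random.default_rng(0)
sample = {m: rng.normal(size=(n, 32)) for m, n in
          [("image", 196), ("audio", 120), ("imu", 10), ("pressure", 10)]}
kept = modality_dropout(sample, p_drop=0.3, rng=rng)
print(sorted(kept))  # names of the streams that survived this draw
```

Because an averaging-based fusion step can operate over any subset of modalities, dropped streams can simply be omitted rather than zero-filled, which mirrors what happens at deployment time when a sensor actually fails.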

Conclusion

Sparsh-X and Digit 360 mark a leap forward in tactile AI, bringing nuanced, multimodal sensory fusion to robotic fingertips. By combining images, audio vibrations, motion, and pressure signals, Sparsh-X generates representations that significantly outperform single-modality baselines, boosting task success, robustness, and the accuracy of material and force inference.

Yet, like all frontier research, it opens as many questions as it answers: how to generalize, deploy in real time, interpret embeddings, and scale to new settings. For anyone exploring AI-guided robotics or touch-aware systems, Sparsh-X is a milestone, but also a springboard for future innovation.